Direct IO for predictable performance

Almost everything I know about Direct I/O, I learned from the foyer project.

Direct I/O is widely adopted in database and storage systems because it bypasses the page cache, allowing the application to manage memory directly for improved performance.

However, for most systems that are not constrained by CPU or I/O bottlenecks, buffered I/O is generally sufficient and simpler to use.

So Direct I/O is not the right fit for most systems. Today, though, I’d like to show how and why it can be beneficial in big data shuffle systems. Let’s dive in!

Motivation

The issue described in PR #19 highlights the limitations of buffered I/O in Apache Uniffle.

Without Direct I/O, performance can become unstable when the operating system flushes the page cache to disk under memory pressure. This behavior introduces high latency in RPC calls that fetch data from memory or local disk.

In the context of shuffle-based storage systems, such as Uniffle, the flush block size is typically large (e.g., 128MB). Uniffle also leverages large in-memory buffers to accelerate data processing. Given this design, relying on the system’s page cache offers little benefit and can even be counterproductive.

Therefore, Direct I/O is a better fit for this scenario.

Requirements

- Enough memory to maintain a pool of aligned memory blocks for your system

- Some Rust knowledge

- Writes that are big enough (MB or more) when they reach the disk

Just do it

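Enabling the direct flag with the Rust standard library

This article focuses exclusively on Linux systems. Fortunately, Direct I/O can be enabled through the standard library's OpenOptionsExt::custom_flags, with no dedicated I/O framework required. The only extra piece is the O_DIRECT constant itself, which the standard library does not define; the libc crate provides it.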

Here is an example of how to enable Direct I/O in code:

let path = "/tmp/";

let mut opts = OpenOptions::new();

opts.create(true).write(true);

#[cfg(target_os = "linux")]

{

use std::os::unix::fs::OpenOptionsExt;

opts.custom_flags(libc::O_DIRECT); // the O_DIRECT constant is provided by the libc crate

}

let file = opts.open(path)?;

// write_at is provided by the std::os::unix::fs::FileExt trait

file.write_at(data, offset)?;

file.sync_all()?;

Read

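// File::open alone does not set O_DIRECT; for a direct read, open the file

// through OpenOptions with custom_flags as shown above

let file = File::open(path)?;

#[cfg(target_family = "unix")]

use std::os::unix::fs::FileExt;

// read_buf is the buffer that receives the data

// read_offset is the position in the file where reading starts

// read_size is the number of bytes actually read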

let read_size = file.read_at(&mut read_buf[..], read_offset)?;

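That's all. Easy, right?

4K alignment for writes and reads

However, a critical detail is not shown in the code example above: Direct I/O has alignment requirements. The buffer's memory address, the transfer size, and the file offset must all be multiples of the block size, which is 4KB in this article (the exact value depends on the device and filesystem). This means a buffer size like 16KB is valid, but 15KB is not. The requirement exists because the data moves directly between the application's buffer and the storage device, which only accepts properly aligned I/O.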

If you want to write only 1KB of data using Direct I/O, you need to follow these steps:

- Allocate a 4KB-aligned buffer in a contiguous memory region (e.g., using posix_memalign).

- Copy your 1KB of data into the beginning of this buffer (starting at offset 0).

- Use Direct I/O APIs (as shown in the previous example) to write the full 4KB buffer to disk.

This satisfies the alignment constraints. A misaligned request does not silently succeed: on Linux the call typically fails with EINVAL.
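The steps above can be sketched with only the standard library. This is a minimal sketch under stated assumptions, not the code used by riffle or foyer: AlignedBuf and ALIGN are hypothetical names, the block size is assumed to be 4KB, and std::alloc stands in for posix_memalign.

```rust
use std::alloc::{alloc_zeroed, dealloc, Layout};
use std::slice;

/// Assumed block size; the real value depends on the device and filesystem.
const ALIGN: usize = 4096;

/// A zero-filled heap buffer whose address and length are multiples of ALIGN.
/// (Hypothetical helper for illustration.)
struct AlignedBuf {
    ptr: *mut u8,
    layout: Layout,
}

impl AlignedBuf {
    /// Allocates a buffer that can hold `min_len` bytes, rounded up to ALIGN.
    fn new(min_len: usize) -> Self {
        // Round the length up to the next multiple of ALIGN (at least one block).
        let len = (min_len.max(1) + ALIGN - 1) / ALIGN * ALIGN;
        let layout = Layout::from_size_align(len, ALIGN).expect("invalid layout");
        // SAFETY: the layout has a non-zero size.
        let ptr = unsafe { alloc_zeroed(layout) };
        assert!(!ptr.is_null(), "allocation failed");
        Self { ptr, layout }
    }

    fn as_slice(&self) -> &[u8] {
        // SAFETY: ptr is valid for layout.size() zero-initialized bytes.
        unsafe { slice::from_raw_parts(self.ptr, self.layout.size()) }
    }

    fn as_mut_slice(&mut self) -> &mut [u8] {
        // SAFETY: same as above, and we hold a unique borrow.
        unsafe { slice::from_raw_parts_mut(self.ptr, self.layout.size()) }
    }
}

impl Drop for AlignedBuf {
    fn drop(&mut self) {
        // SAFETY: ptr was allocated with exactly this layout.
        unsafe { dealloc(self.ptr, self.layout) }
    }
}
```

For the 1KB case, AlignedBuf::new(1024) yields a 4096-byte buffer. Copy the payload into the first 1024 bytes of as_mut_slice(), then pass as_slice() to write_at.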

Handling reads and writes of different sizes

Different operations deal with 4K alignment as follows:

Read with 5K (>4K)

- Create an 8K buffer

- Read 8K into it

- Drop the trailing 3K before handing the data to the caller

Read with 3K

- Create a 4K buffer

- Read 4K into it

- Drop the trailing 1K
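The arithmetic behind both read cases can be written down as a small helper. This is a sketch only: ReadPlan, plan_read, and BLOCK are hypothetical names, and supporting a non-zero, unaligned start offset is an assumption that goes beyond the two examples above.

```rust
/// Assumed block size for Direct I/O.
const BLOCK: u64 = 4096;

/// Rounds `n` down to a multiple of BLOCK.
fn align_down(n: u64) -> u64 {
    n / BLOCK * BLOCK
}

/// Rounds `n` up to a multiple of BLOCK.
fn align_up(n: u64) -> u64 {
    (n + BLOCK - 1) / BLOCK * BLOCK
}

/// The aligned read that covers a requested byte range.
#[derive(Debug, PartialEq)]
struct ReadPlan {
    aligned_offset: u64, // file offset where the Direct I/O read starts
    aligned_len: u64,    // size of the aligned buffer to read into
    skip: u64,           // bytes to drop at the front of the buffer
    take: u64,           // bytes to hand back to the caller
}

/// Computes which aligned region to read for `len` bytes at `offset`.
fn plan_read(offset: u64, len: u64) -> ReadPlan {
    let aligned_offset = align_down(offset);
    let aligned_end = align_up(offset + len);
    ReadPlan {
        aligned_offset,
        aligned_len: aligned_end - aligned_offset,
        skip: offset - aligned_offset,
        take: len,
    }
}
```

The caller reads aligned_len bytes at aligned_offset and returns the slice from skip to skip + take. Everything after that is the tail to discard.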

Write with 5K

- Create an 8K buffer and copy the 5K of data into it

- Write the full 8K buffer to disk

Note: the file now holds 3K of padding after the real data, so readers must filter out the extra bytes. That is the cost.

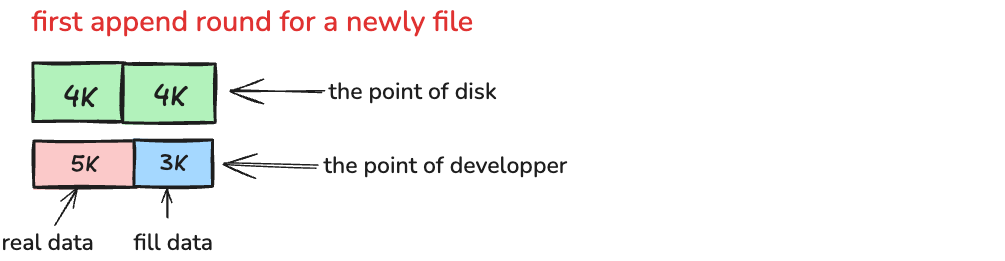

Appending 5K multiple times

First round, when the file has no data yet:

- Create an 8K buffer and copy the 5K of data into it

- Write the buffer to disk

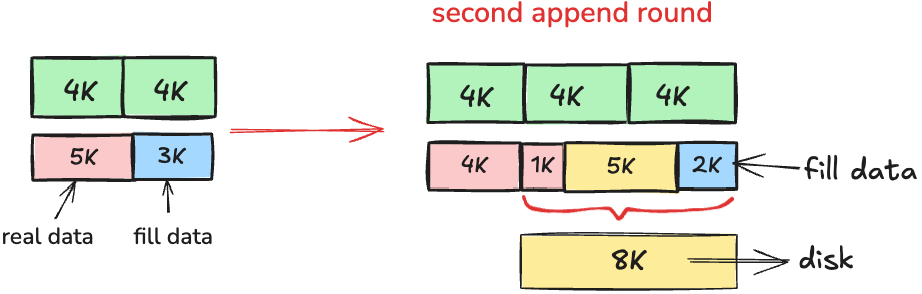

Second round with another 5K, when the file already has data:

- Read the 1K tail (5K - 4K) from disk, that is, the partial block written in the previous round

- Combine that 1K with the new 5K. The resulting 6K must be aligned to 4K, so create a 4K * 2 = 8K buffer

- Write the buffer at the 4K offset, where the partial block begins
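The two rounds above follow one rule, sketched below. AppendPlan and plan_append are hypothetical names, and the sketch assumes the application tracks the logical file length itself (5K after the first round), since the padded file on disk is already 8K.

```rust
/// Assumed block size for Direct I/O.
const BLOCK: u64 = 4096;

/// What an aligned append has to do for a given logical file length.
#[derive(Debug, PartialEq)]
struct AppendPlan {
    write_offset: u64, // aligned file offset where the write starts
    tail_len: u64,     // bytes of the last partial block to re-read first
    buf_len: u64,      // aligned buffer size: tail + new data, rounded up
}

/// `logical_len` is the number of real (unpadded) bytes already in the file.
fn plan_append(logical_len: u64, new_len: u64) -> AppendPlan {
    // The write must start at the beginning of the last partial block.
    let write_offset = logical_len / BLOCK * BLOCK;
    // Bytes of real data that live in that partial block.
    let tail_len = logical_len - write_offset;
    // Tail plus new data, rounded up to whole blocks.
    let buf_len = (tail_len + new_len + BLOCK - 1) / BLOCK * BLOCK;
    AppendPlan { write_offset, tail_len, buf_len }
}
```

With these inputs, the first round is plan_append(0, 5120) and the second is plan_append(5120, 5120), which asks for a 1K tail, an 8K buffer, and a write at offset 4K.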

Aligned memory buffer pool

From the examples above, we know that we have to request contiguous memory regions. If the machine doesn't have enough free memory, page faults will occur.

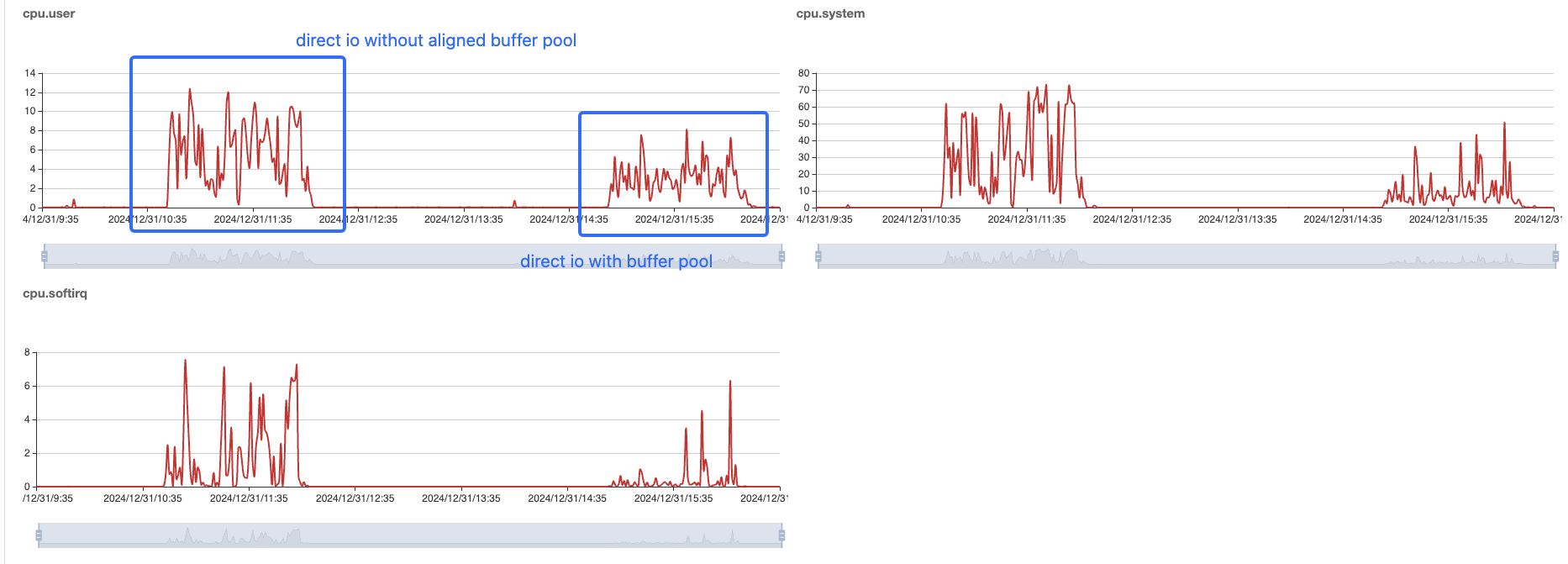

With Direct I/O, we have to manage the aligned buffers and disk blocks ourselves. Without an aligned buffer pool, page faults occur frequently, which also drives the system load up (as shown in the screenshot above).

In the riffle project, I create 4 * 1024 / 16 = 256 buffers of 16M each (4G in total).
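A pool like this can be sketched in safe Rust. This is an illustration, not riffle's implementation: BufferPool, AlignedBuf, and ALIGN are hypothetical names, the pool is single-threaded (a real service would need a lock or a concurrent queue), and alignment is obtained by over-allocating a Vec and slicing from the first aligned address.

```rust
/// Assumed block size for Direct I/O.
const ALIGN: usize = 4096;

/// A buffer whose usable slice starts at an ALIGN-aligned address.
struct AlignedBuf {
    raw: Vec<u8>, // over-allocated backing storage
    start: usize, // offset of the first aligned byte inside `raw`
    len: usize,   // usable length, expected to be a multiple of ALIGN
}

impl AlignedBuf {
    fn new(len: usize) -> Self {
        // Allocate ALIGN extra bytes so an aligned start always fits.
        let raw = vec![0u8; len + ALIGN];
        let addr = raw.as_ptr() as usize;
        let start = (ALIGN - addr % ALIGN) % ALIGN;
        Self { raw, start, len }
    }

    fn as_mut_slice(&mut self) -> &mut [u8] {
        &mut self.raw[self.start..self.start + self.len]
    }
}

/// A fixed set of buffers allocated up front and reused across I/O calls.
struct BufferPool {
    free: Vec<AlignedBuf>,
}

impl BufferPool {
    fn new(count: usize, buf_size: usize) -> Self {
        let free = (0..count).map(|_| AlignedBuf::new(buf_size)).collect();
        Self { free }
    }

    /// Takes a buffer out of the pool, or returns None if all are in use.
    fn acquire(&mut self) -> Option<AlignedBuf> {
        self.free.pop()
    }

    /// Returns a buffer to the pool so a later call can reuse it.
    fn release(&mut self, buf: AlignedBuf) {
        self.free.push(buf);
    }

    fn available(&self) -> usize {
        self.free.len()
    }
}
```

With the numbers above, the pool would be built as BufferPool::new(4 * 1024 / 16, 16 * 1024 * 1024). Returning None on exhaustion is one possible design choice; blocking until a buffer is released is another.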

Let's look at the machine metrics with and without the buffer pool.

With the buffer pool, the system and user CPU load is lower than before, because without the pool too much time was spent requesting memory.

Small but effective optimization trick

When flushing a large amount of data (for example 10G) into a file, you can split the operation into multiple append operations. This avoids requesting one overly large contiguous memory region, which would burden the system and slow down the service.
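The split itself is simple bookkeeping, as the sketch below shows. split_into_appends is a hypothetical helper, and using a 16M chunk size to match the pool buffers is an assumption, not something stated for riffle.

```rust
/// Splits a flush of `total` bytes into (offset, len) pieces of at most
/// `chunk` bytes each, so that every append needs only one pool-sized buffer.
fn split_into_appends(total: u64, chunk: u64) -> Vec<(u64, u64)> {
    assert!(chunk > 0, "chunk size must be positive");
    let mut parts = Vec::new();
    let mut offset = 0;
    while offset < total {
        // The last piece may be shorter than `chunk`.
        let len = chunk.min(total - offset);
        parts.push((offset, len));
        offset += len;
    }
    parts
}
```

If the chunk size is a multiple of 4K, every piece except possibly the last starts and ends on an aligned boundary, so only the final append needs padding.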

Performance

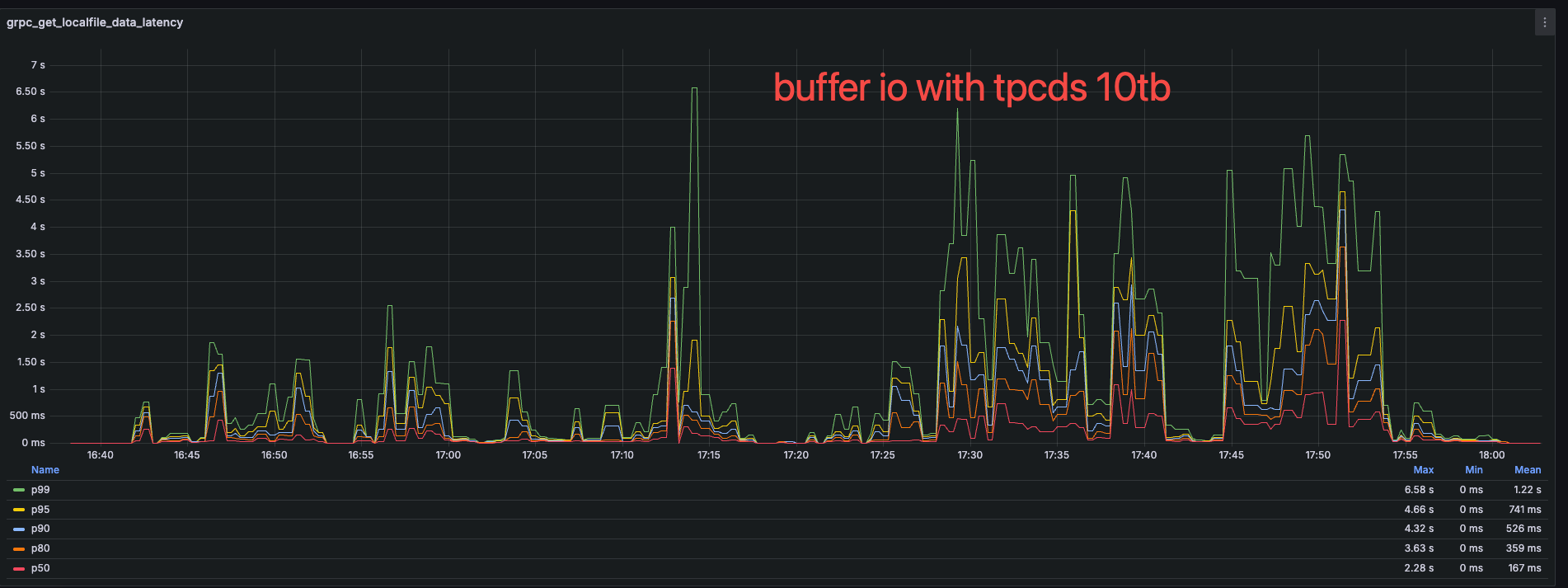

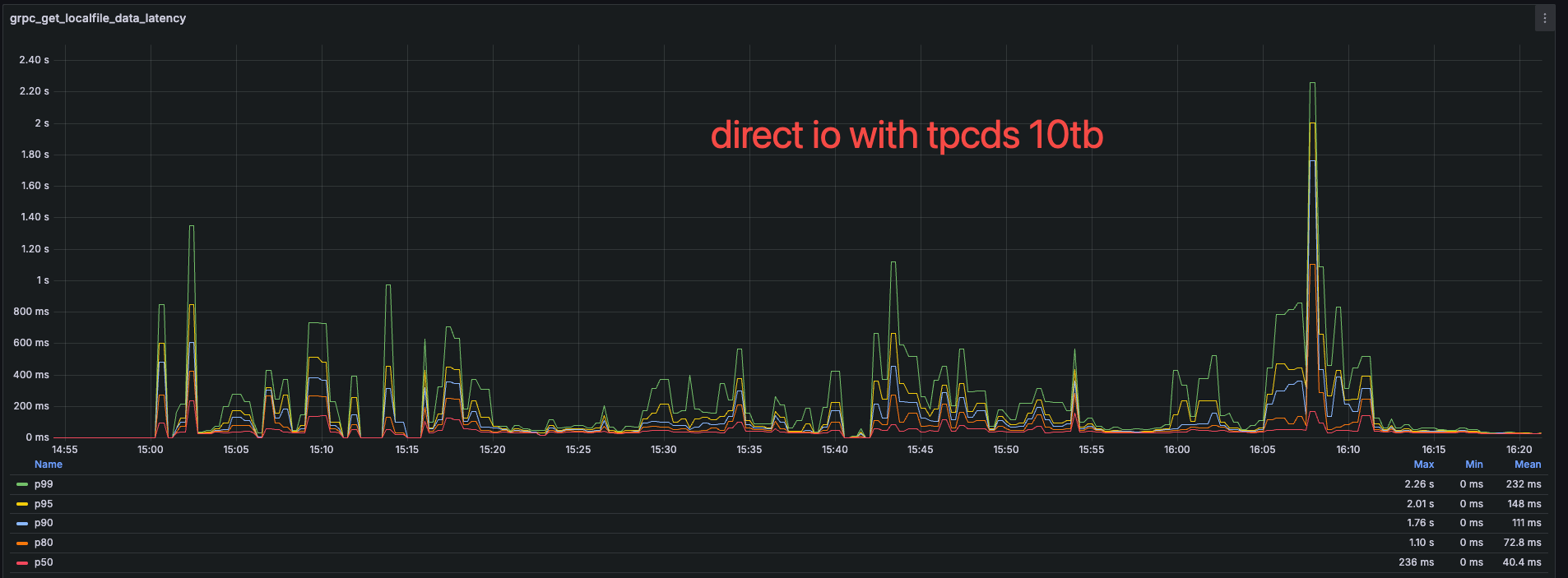

The RPC latency of getting data from local disk

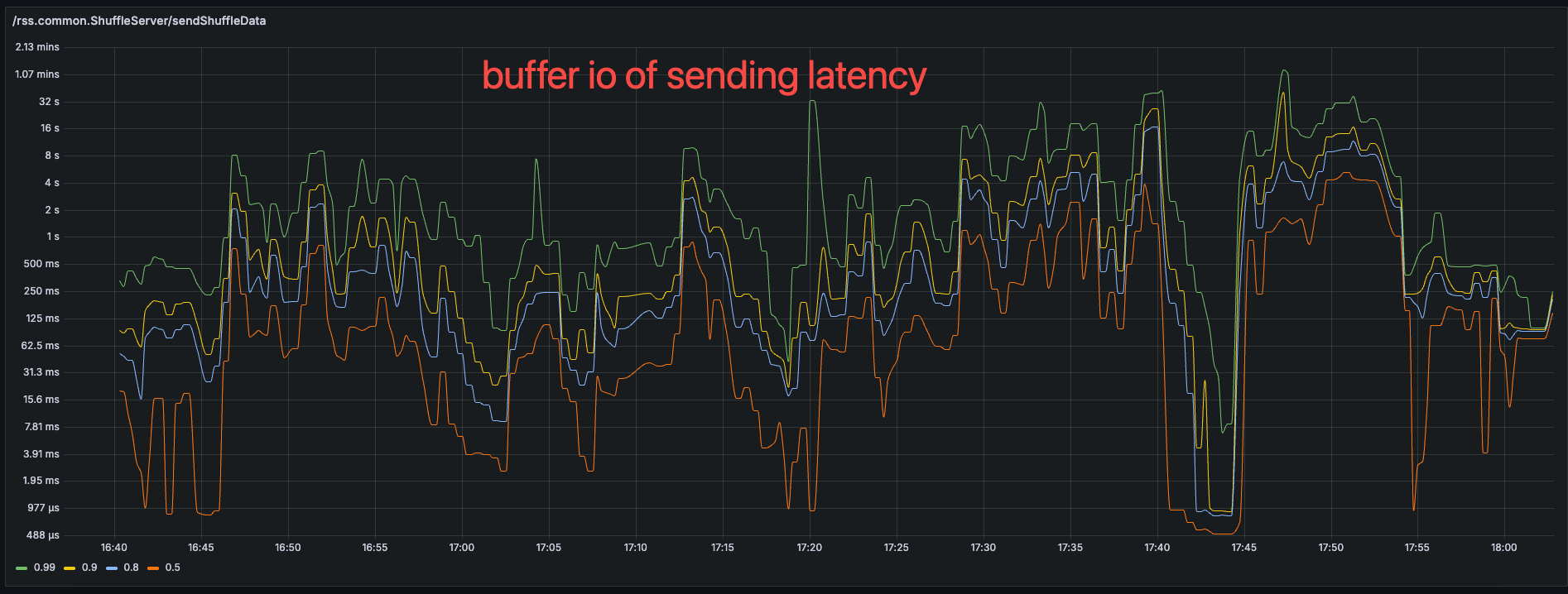

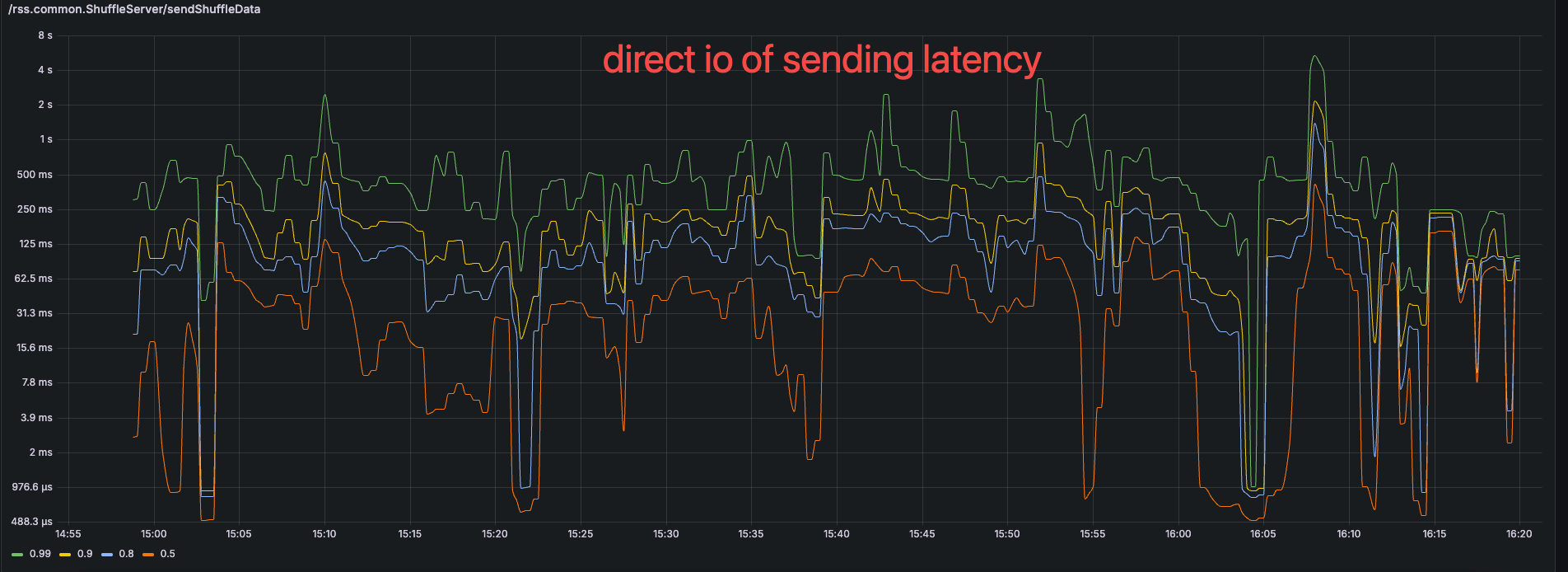

The RPC latency of sending data to memory

Thanks to the lower system load, the latency of sending data with Direct I/O is lower than with buffered I/O.

Now, it looks great!